Standard tools miss STR expansions

CommentsSummary: STR expansions, while theoretically possible to detect from sequencing data, are still not accurately genotyped by standard tools.

Background

Recently we and others have made a ton of progress in tackling the bioinformatic challenge of genotyping short tandem repeats (STRs) from high throughput sequencing data (see lobSTR.) However, our and other tools are only able to genotype repeats that are short enough to be completely spanned by sequencing reads, limiting us to STRs of less than ~80-90bp, and excluding the ability to type large expansions such as those seen in Huntington's Disease or Fragile X Syndrome. Furthermore, alignment advances (e.g. bwa mem) are becoming more and more indel-sensitive, and new genotypers (e.g. GATK HaplotypeCaller) are using more sensitive local assembly approaches that could potentially used for genotyping long STRs.

Here I evaluate how these tools perform at a long repeat, using the Huntington's CAG repeat as an example. The hg19 reference genome has 19 copies of CAG (at chr4:3076603). Under 26 repeats is considered normal, and full mutations have 40 or more repeats. Normal and low end pathogenic mutations should be spanned by short reads (here I use 150bp paired end reads to be generous). I use a range of normal, short full, and long full mutations to test how bwa mem and GATK perform at these regions.

The short answer is that in the easiest cases of short alleles that can be fully spanned by a single read, they do a decent job. However sequencing errors and longer alleles (even those that are spannable) pose significant problems and give misleading results.

Simulating reads

First, I created a reference fasta file containing different Huntington's alleles (chr4:3076603-3076660 +/- 1000bp):>HTT_ref_19_normal

...(CAG)19...

>HTT_20_normal

...(CAG)20...

>HTT_25_normal

...(CAG)25...

>HTT_40_full

...(CAG)40...

>HTT_60_full

...(CAG)60...wgsim (https://github.com/lh3/wgsim) which I used to simulate reads with and without errors:

# simulate paired end reads - no errors

wgsim \

-1 150 -2 150 -N 5000 -e 0.0 -r 0.0 \

huntington_expansion_multalleles.fa \

huntington_expansion_multalleles.read1.fq \

huntington_expansion_multalleles.read2.fq

# simulate paired end reads - with errors

wgsim \

-1 150 -2 150 -N 5000 -e 0.02 -r 0.0 \

huntington_expansion_multalleles.fa \

huntington_expansion_multalleles.err0.02.read1.fq \

huntington_expansion_multalleles.err0.02.read2.fqAligning with bwa mem

Next I aligned reads using bwa mem (see https://github.com/lh3/bwa). The full script is below:#!/bin/bash

alleles="HTT_ref_19_normal HTT_20_normal HTT_25_normal HTT_40_full HTT_60_full"

for allele in $alleles

do

# get fq

grep -A 3 "^@$allele" huntington_expansion_multalleles.read1.fq > huntington_expansion_multalleles.$allele.read1.fq

grep -A 3 "^@$allele" huntington_expansion_multalleles.read2.fq > huntington_expansion_multalleles.$allele.read2.fq

grep -A 3 "^@$allele" huntington_expansion_multalleles.err0.02.read1.fq > huntington_expansion_multalleles.$allele.err0.02.read1.fq

grep -A 3 "^@$allele" huntington_expansion_multalleles.err0.02.read2.fq > huntington_expansion_multalleles.$allele.err0.02.read2.fq

# run bwamem

bwa mem \

-R "@RG\tID:$allele\tSM:$allele" \

/Users/gymrek/resources/genomes/b37/human_g1k_v37.fasta \

huntington_expansion_multalleles.$allele.read1.fq \

huntington_expansion_multalleles.$allele.read2.fq > \

huntington_expansion_multalleles.$allele.sam

bwa mem \

-R "@RG\tID:$allele.err\tSM:$allele.err" \

/Users/gymrek/resources/genomes/b37/human_g1k_v37.fasta \

huntington_expansion_multalleles.$allele.err0.02.read1.fq \

huntington_expansion_multalleles.$allele.err0.02.read2.fq > \

huntington_expansion_multalleles.$allele.err0.02.sam

samtools view -bS huntington_expansion_multalleles.$allele.sam > huntington_expansion_multalleles.$allele.bam

samtools view -bS huntington_expansion_multalleles.$allele.err0.02.sam > huntington_expansion_multalleles.$allele.err0.02.bam

samtools sort huntington_expansion_multalleles.$allele.bam huntington_expansion_multalleles.$allele.sorted

samtools sort huntington_expansion_multalleles.$allele.err0.02.bam huntington_expansion_multalleles.$allele.err0.02.sorted

samtools index huntington_expansion_multalleles.$allele.sorted.bam

samtools index huntington_expansion_multalleles.$allele.err0.02.sorted.bam

rm huntington_expansion_multalleles.$allele.sam

rm huntington_expansion_multalleles.$allele.bam

rm huntington_expansion_multalleles.$allele.err0.02.sam

rm huntington_expansion_multalleles.$allele.err0.02.bam

done

samtools merge -f huntington_expansion_all.bam $(ls huntington_expansion_multalleles*.bam)

samtools index huntington_expansion_all.bamTo visualize the results, I used my personal favorite alignment viewer, PyBamView :). I used the command:

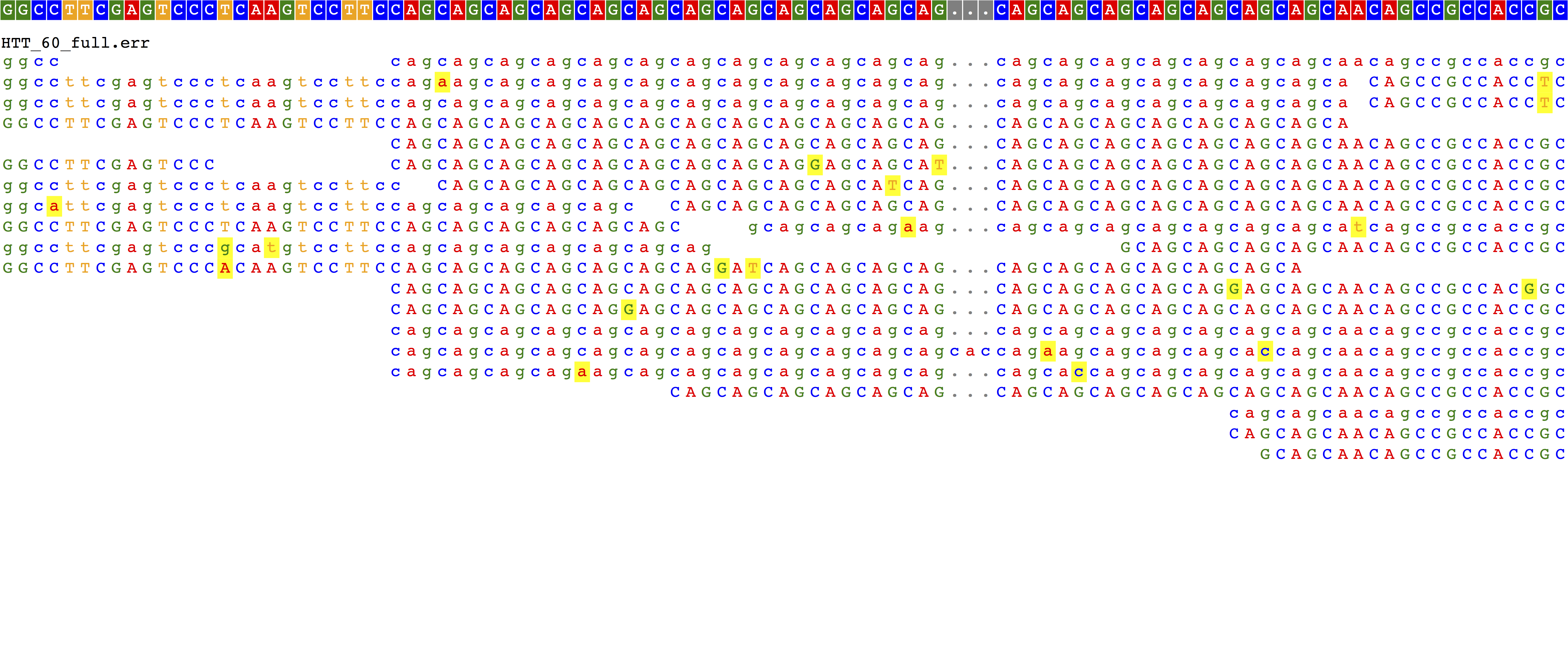

pybamview --ref human_g1k_v37.fasta --bamdir . --downsample 20- Reference allele (19xCAG)

- High normal range (25xCAG)

- High normal range (25xCAG) shown without sequencing errors

- Disease allele that can be spanned by 150bp reads (40xCAG)

- Disease allele that can't be spanned by 150bp reads (60xCAG)

The reference case looks good. We see reads completely spanning the 19xCAG repeat and no evidence of insertion or deletion. 25xCAG with sequencing errors looks pretty messy, but we do see evidence for a 6xCAG insertion for each read that spans the repeat region.

40xCAG, which is in the full mutation range, starts to look not as expected. Even though some reads completely span the repeat (repeat region is 120bp and we have 150bp reads), glancing at the alignment shows something that looks completely like the reference. Same deal for the 60xCAG repeat, which is too long for us to span, but which we would expect to show some evidence of insertion. What's going on with those?

Taking a closer look at an example read that should have shown evidence of insertion:

HTT_40_full_981_1469_0:0:0_0:0:0_222 99 4 3076584 4 79M71S = 3076860 426 TCGAGTCCCTCAAGTCCTTCCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAACAGCCGC IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII NM:i:0 MD:Z:79 AS:i:79 XS:i:76 RG:Z:HTT_40_full SA:Z:18,53253385,+,60S76M14S,4,0; XA:Z:18,+53253385,27S76M47S,0;18,+53253385,21S76M53S,0;16,-73580562,56S74M20S,1;X,+66765160,20S68M62S,0;17,-10899030,67S64M19S,0;

HTT_40_full_981_1469_0:0:0_0:0:0_222 147 4 3076860 60 150M = 3076584 -426 GCTACGGCGGGGATGGCGGTAACCCTGCAGCCTGCGGGCCGGCGACACGAACCCCCGGCCCCGCAGAGACAGAGTGACCCAGCAACCCAGAGCCCATGAGGGACACCCGCCCCCTCCTGGGGCGAGGCCTTCCCCCACTTCAGCCCCGCT IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII NM:i:0 MD:Z:150 AS:i:150 XS:i:0 RG:Z:HTT_40_full

HTT_40_full_981_1469_0:0:0_0:0:0_222 2147 18 53253385 4 60H76M14H 4 3076860 0 AGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCA IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII NM:i:0 MD:Z:76 AS:i:76 XS:i:70 RG:Z:HTT_40_full SA:Z:4,3076584,+,79M71S,4,0;

- A read that should have spanned the repeat instead gets soft clipped, with a CIGAR of "79M71S", making it appear to match the reference. Taking a closer look at the

bwa memscoring suggests the answer: a tradeoff between penalties for clipping ends (5) and for gap extension (1). (although I'm not sure exactly how the clipping penalty works, e.g. is it per-bp? same for soft vs. hard?). For these cases, clipping the alignment produces a higher alignment score than including a 63bp or more insertion, and so is favored. Setting-L 1000results in alignments favoring the expansion (not shown). This is not something BWA is doing wrong, it is just scoring like we told it to. But whether or not this parameter is set does have implications for STR calling (see conclusion for more discussion). - Besides the alignment of the two pairs, there is a third alignment to chromosome 18, consisting of a perfect copy of the repeat that has been hard-clipped out of the original read. The flag 2147 indicates this is a "supplementary alignment" (see explain-flags), which is used to show evidence of a chimeric read but is kind of bizarre in this case. I think this alignment will be ignored by standard tools.

Calling variants with GATK HaplotypeCaller

I thought I'd give GATK's haplotypecaller a go at picking up some of these expansions. Since it performs local reassembly of the reads, maybe it will fix the clipping issues.#!/bin/bash

REF=/Users/gymrek/resources/genomes/b37/human_g1k_v37.fasta

# indel realignment

java -jar /Users/gymrek/sources/GenomeAnalysisTK.jar \

-T RealignerTargetCreator \

-R $REF \

-I huntington_expansion_all.bam \

-o realignment_targets.list

java -jar /Users/gymrek/sources/GenomeAnalysisTK.jar \

-T IndelRealigner \

-R $REF \

-I huntington_expansion_all.bam \

-targetIntervals realignment_targets.list \

-o huntington_expansion_all_realigned.bam

# haplotypecaller

java -jar /Users/gymrek/sources/GenomeAnalysisTK.jar \

-T HaplotypeCaller \

-R $REF \

-I huntington_expansion_all_realigned.bam \

-o huntington_expansion_hc.vcf \

-bamout huntington_expansion_hc.bamout.bam4 3076603 . C CCAG,CCAGCAGCAGCAGCAGCAG,CCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAGCAG- HTT_19_ref_normal: 0/0 (19xCAG)

- HTT_19_ref_normal.err: ./. (nocall)

- HTT_20_normal: 1/1 (20xCAG)

- HTT_20_normal.err: ./. (nocall)

- HTT_25_normal: 2/2 (25xCAG)

- HTT_25_normal.err: ./. (nocall)

- HTT_40_full: 3/3 (40xCAG)

- HTT_40_full.err: ./. (nocall)

- HTT_60_full: 3/3 (40xCAG)

- HTT_60_full.err: ./. (nocall)

- For repeat numbers 19-40, without sequencing errors, HC actually gets it right. This should have been expected, as HC reassembles the input reads, so shouldn't be confused by all the soft clipping from bwa mem.

- In all cases samples that had sequencing errors ended up with no genotype call. I'm guessing this is because the alignments at the repeat region turned out to be too messy so the HC gave up, but will need to investigate this further.

- For the case of 60 repeats, which cannot be spanned by a single read, we surprisingly got a call of 40xCAG. Perhaps of all the haplotypes that HC was able to assemble and consider, this was closest. Although, it also reported 40xCAG for the case of a simulated 45xCAG expansion (not shown). Perhaps there is a max indel size being considered? this GATK forum page says a rule of thumb is it can pick up indels about half the read length. I'm not sure if that means there is a strict cutoff.

Conclusion

In conclusion, it seems like standard tools still aren't quite ready to accurately pick up large STR expansions, even those that can be spanned by short reads. These reads are probably an easy case, as I only simulated base mismatch errors, and made no attempt to simulate PCR stutter, the main source of noise at STRs.

Note I only tested one genotyper, so perhaps others would have behaved differently and are worth trying. I also didn't show our own lobSTR's results here, but as expected we seem to be strongly biased toward calling deletions from the reference, which is exacerbated by using bwa mem alignments that have been soft clipped as input.

One important point that arose from this is the tendency of bwa mem with default parameters to trim reads in favor of large insertions or deletions at repeats. Perhaps this scoring behavior makes sense at non-repeat regions, but it could make sense to use repeat-region-specific penalties that do not penalize insertions or deletions of the repeat unit. Importantly, this probably means there is probably a maximum insertion size that downstream callers (e.g. our lobSTR) will be strongly affected by and should be aware of. This will create a strong bias toward calls that are deletions vs. insertions from the reference, which we have actually been seeing using existing bwa mem alignments.

Tweet comments powered by Disqus